Whose Art is This? Training an AI to Make Fun Cat Images in My Art Style Using Open Art's PhotoBooth AI Workspace

AI image generation is a rapidly developing field. A debate is currently under way about AI art generators being trained on the works of practicing professional artists without their permission, which allows almost anyone to create images in those artists' styles without compensating the artists in any way. On top of that, most AI image generator output is generally treated by the U.S. Copyright Office, Library of Congress, as being in the 'public domain', able to be used commercially by anyone.

Technically that means if I try to sell an AI generated image as prints, anyone else can come along, take the exact same image, and sell it as prints too. Legally it's very much a developing situation, evolving alongside the AI tools themselves. Online AI generators such as Mage.space, for example, recently announced a rewards program for AI model creators. (An AI model, in this sense, is a general AI additionally trained on a specific data set, for example many images from the same artist, so that it generates new images in that artist's style.)

Which gets me to the point of this article. As an artist myself, what excites me most is the possibility of creating an AI model of my own art style so I can generate my own AI images almost instantly in my style. I think that would be an incredibly useful tool, especially for producing new art in a style I no longer create physical artworks in.

Training an AI to Paint #TETCats# Style

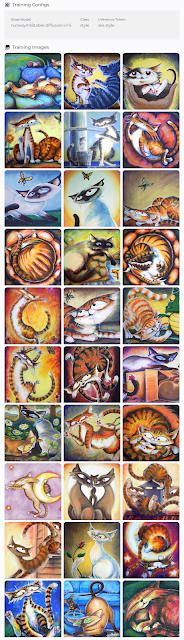

| Fig 1. The 30 Cat Artwork Images I trained my AI Model with. |

My cat artworks also sold relatively well as prints on RedBubble. This got me thinking, maybe there's an opportunity here to create new cat artworks in my style with AI to sell as prints.

I set about looking for an AI text to image tool that would allow me to train a model on my style, eventually settling on Open Art's Photo Booth as an easy beginner's option. So easy, you could do this too. You can train it on any images, not just art (train it on images of yourself so you can produce profile images in any art style, or of you doing anything you can imagine).

Training the AI

PhotoBooth uses a base model of Stable Diffusion 1.5 and allows you to create AI models trained on any set of 30-100 images depending on what pricing plan you choose.

Since this was my first time I went with the basic package with personalized photos for USD$10.00. This allowed me to train a single model with 30 images and gave me 400 credits (basically 400 image generations).

Open Art will keep your model for 30 days after which you can extend the time with a subscription. You can also download the model and use it with a local install of Stable Diffusion if you're technically minded and have a computer able to run it.

When you first set up your model you have the choice of four base models trained toward males, females, pets, and other. I figured pets would be better for me rather than other since my training images are stylized cats.

For my 30 images I chose a set of cat paintings from 2004-2007. Click the link to see the Flickr Album of the full images. Photo Booth requires you to crop all your images into a square. Fortunately you can do this quickly after uploading. I tried to choose the most important area of each image.

It takes about 30 minutes to an hour for your model to be ready. From there the system works like most other browser based AI text to image generators. To apply your model to the prompt you simply include the tag you set up, which in my case was '#TETCats# style'.

You also have various sliders you can adjust, which I'll get into as I go through my results.

The First Preset Generations

Once your model is ready to go you'll see a whole bunch of free, preset generations created with prompts that might be typical of the base model you chose - in my case 'Pets'.

While none of my preset generations were usable, as they were not typically based on activities I depict my cats performing, they were really fun to see, sometimes creepy, but mostly showed promise that the training understood my style to a degree.

Finding the Best Prompt (Highlights)

Obviously with 400 credits I can't show you every single image generation. Instead I'll run you through my thinking on my prompts and show a few of the best (and probably a few of the 'WTF is that?' images as well).

Early Prompts

The most common cat image I've painted is a ginger cat, crazily leaping from between flowers, trying to catch a butterfly. That was my first prompt (including the '#tetcats# style' tag that tells the AI to use your model): "A cat #tetcats# style, leaping from a field of flowers, trying to catch a butterfly."

For my early generations I went with the default AI settings and, for the whole time, I stuck with the standard 4 image generations for each prompt. If I got really promising results I clicked the option to generate 8 more images for the same prompt and settings.
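As a rough budgeting aid, the arithmetic behind those choices can be sketched in Python. It assumes one credit buys exactly one image (the package is described as "basically 400 image generations"), and both function names are made up for illustration.

```python
def prompts_affordable(credits: int, images_per_prompt: int = 4) -> int:
    """Number of full prompt runs a credit balance covers (assumes 1 credit = 1 image)."""
    if credits < 0 or images_per_prompt <= 0:
        raise ValueError("credits must be >= 0 and images_per_prompt must be > 0")
    return credits // images_per_prompt


def credits_used(prompt_runs: int, extended_runs: int = 0,
                 images_per_prompt: int = 4, extra_images: int = 8) -> int:
    """Credits spent on prompt runs, some of which were extended with extra images."""
    if prompt_runs < 0 or not 0 <= extended_runs <= prompt_runs:
        raise ValueError("extended_runs must be between 0 and prompt_runs")
    return prompt_runs * images_per_prompt + extended_runs * extra_images


print(prompts_affordable(400))             # 100 prompts at 4 images each
print(credits_used(10, extended_runs=2))   # 10*4 + 2*8 = 56 credits
```

In other words, sticking to four images per prompt stretches 400 credits to about 100 prompt attempts, and every "generate 8 more" click costs the equivalent of two extra attempts.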

Generally I didn't feel these generations captured the painterly texture of my work or the personality of the cats. To counter this I tried adding words to my prompt such as 'art', 'painting', 'warm colors', 'joyful, vivid color', and 'comical'. I even tried adding 'Van Gogh' to my prompt to see if I could literally get visible brush strokes into the image.

| Fig 3. The top left image is the best from my first four image generations before I started modifying the prompt with additional words. Spot the two Van Gogh image prompts! |

You Want Four Legs and ONE Tail?

You may have noticed some fairly deformed (or is that 'surreal'?) cats in my early images. I can assure you that cats with more limbs and tails than a standard cat should have were very much the norm, as were deformed limbs, multiple eyes and mouths, floating heads, and more.

I figured maybe my initial prompt was asking a bit too much so I went back to basics with this prompt: "A cat with four legs, one tail #tetcats# style"

This prompt, I swear, made the AI look at me like I was some kind of crazy person as it proceeded to add more legs and tails to almost every image than any single cat (and sometimes two cats) could ever need. Even so, these generations were the most like something I would actually paint (if I was into painting mutilated cats).

AI. This is What a Cat Looks Like!

My next idea was to use Image to Image generation. This involves uploading a reference image and then telling the AI how much you want that image to influence the output. In Photo Booth you get a 'Strength' slider where 0 equals no influence, and 1 equals just hand me back the reference image as output.
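If you later run a downloaded model locally, be aware that the slider direction can differ between tools. The sketch below assumes a local tool that follows the convention of the `strength` argument in Hugging Face diffusers' image-to-image pipeline, where a higher value adds more noise and so keeps less of the reference, the opposite of the slider as described above. Whether Photo Booth maps its slider this way internally is an assumption, and both function names are hypothetical.

```python
def influence_to_denoising_strength(influence: float) -> float:
    """Convert 'how much the reference matters' (0..1) to a denoising strength (0..1).

    Assumes the local tool treats strength as the amount of noise added,
    so full influence of the reference means zero denoising strength.
    """
    if not 0.0 <= influence <= 1.0:
        raise ValueError("influence must be between 0 and 1")
    return 1.0 - influence


def effective_steps(num_steps: int, denoising_strength: float) -> int:
    """Approximate number of denoising steps actually run in image-to-image mode."""
    return min(int(num_steps * denoising_strength), num_steps)


strength = influence_to_denoising_strength(0.75)
print(strength)                        # 0.25
print(effective_steps(50, strength))   # 12
```

One practical consequence under this convention: the more you lean on the reference image, the fewer steps the tool spends repainting it.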

| Fig 5. The image in the top left is my reference image of a kitten on a white background. The other five images are generations based upon it with varying Strength and CFG settings. |

At the same time I made an effort to learn more about other settings I could adjust, like the CFG scale. This is kind of similar to the Strength setting on the reference image, but it controls how closely the AI will try to stick to your prompt. A value of 2 means 'what prompt?' A value of 30 means 'I'll do my best to incorporate as much of this prompt as I can but I hate you for micromanaging my creativity - it'll look awful!'. I settled on a value of 15 (the default is 7), which is considered good for detailed prompts.
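For readers curious about what the CFG number does under the hood, here is a minimal sketch of classifier-free guidance, the general technique the scale is named after. At each step the model makes one prediction with the prompt and one without, and the scale sets how far the result is pushed toward the prompted one. The two-number "predictions" are toy values for illustration, and how Photo Booth applies the scale internally is not something I can confirm, so treat this as the general idea rather than its exact implementation.

```python
def apply_cfg(unconditional, conditional, scale):
    """Classifier-free guidance: start from the no-prompt prediction and
    move toward the prompted prediction by `scale` times their difference."""
    return [u + scale * (c - u) for u, c in zip(unconditional, conditional)]


no_prompt = [0.0, 1.0]     # toy prediction without the prompt
with_prompt = [1.0, 3.0]   # toy prediction with the prompt

print(apply_cfg(no_prompt, with_prompt, 1.0))    # [1.0, 3.0]
print(apply_cfg(no_prompt, with_prompt, 7.0))    # [7.0, 15.0]
print(apply_cfg(no_prompt, with_prompt, 15.0))   # [15.0, 31.0]
```

Higher scales exaggerate whatever difference the prompt makes, which is one way to understand why very high values can start to look overcooked.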

I also increased the number of steps the AI would take to reach a final image from the default of 25 to 50. 25 is usually fine but 50 can often result in a more refined image. More than 50 is considered overkill and of minimal benefit.

Photo Booth also has three different samplers to choose from: DPM Solver++, DDIM, and Euler A. Euler A results in a softer image, great for fantasy style images. As for the other two, even Photo Booth says there isn't really any significant difference between them. For whatever reason I felt DDIM was better for the generations I was getting.
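To keep track of which settings produced which images, it can help to write them down in a structured way. The sketch below is one way to do that in Python. The defaults for CFG and steps come from this article, the 2 to 30 CFG range is my assumption based on the extremes mentioned above, the sampler default is simply my own preference, and the class itself is hypothetical (it is not part of Photo Booth).

```python
from dataclasses import dataclass

# Sampler names as listed in Photo Booth's options.
SAMPLERS = ("DPM Solver++", "DDIM", "Euler A")


@dataclass(frozen=True)
class GenerationSettings:
    cfg_scale: float = 7.0   # tool default according to this article
    steps: int = 25          # tool default according to this article
    sampler: str = "DDIM"    # my preference, not a documented default

    def __post_init__(self) -> None:
        # The 2..30 range is an assumption, not a documented limit.
        if not 2 <= self.cfg_scale <= 30:
            raise ValueError("cfg_scale is assumed to range from 2 to 30")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        if self.sampler.lower() not in {s.lower() for s in SAMPLERS}:
            raise ValueError(f"unknown sampler: {self.sampler!r}")


defaults = GenerationSettings()
mine = GenerationSettings(cfg_scale=15, steps=50, sampler="DDIM")
print(defaults)
print(mine)
```

Saving one of these alongside each batch of images makes it much easier to go back and work out why a particular generation turned out well.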

I feel the reference image I chose initially wasn't a good choice. While I was getting a very coherent interpretation of a cat, the white background was stifling my prompt when I again added flowers and butterflies into the mix.

Changing the Reference and the Prompt... Wow!

The photo you choose as reference can really have a dramatic effect on the output. I found an image that was of a real cat but more in the position I'd paint, if I was painting a cat leaping after a butterfly. As my reference image was more representative of this I changed my prompt to: "A painting of a cat, with a large head, leaping in the air, chasing a butterfly, in a field of flowers. Warm colors. #tetcats# style" (I did try minor variations of this prompt but the results weren't radically different).

I asked for a larger head because my cats tend to have larger heads and those in the previous generations were too small by comparison.

| Fig 6. Again my updated reference image is in the top left. I changed my prompt back to a cat leaping from flowers chasing a butterfly (or some variation of it). |

At this point I'd also discovered a good example of a negative prompt - which is an optional additional prompt you can enter that lists things you don't want to see in your image. I used this negative prompt on every generation going forward: "duplicate, deformed, ugly, mutilated, disfigured, text, extra limbs, face cut, head cut, extra legs, poorly drawn face, mutation, cropped head, malformed limbs, mutated paws, long neck".
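Since the same negative prompt gets reused on every generation, it is worth storing it once and pairing it with each new prompt. The sketch below does that and also checks that the model tag has not been left out of the prompt. The dictionary shape and the `build_request` name are hypothetical (Photo Booth is a browser form, not this API), and treating the tag as case-insensitive is my assumption.

```python
NEGATIVE_PROMPT = (
    "duplicate, deformed, ugly, mutilated, disfigured, text, extra limbs, "
    "face cut, head cut, extra legs, poorly drawn face, mutation, "
    "cropped head, malformed limbs, mutated paws, long neck"
)


def build_request(prompt: str, tag: str = "#tetcats#",
                  negative_prompt: str = NEGATIVE_PROMPT) -> dict:
    """Pair a prompt with the reusable negative prompt (hypothetical request shape)."""
    if tag.lower() not in prompt.lower():
        raise ValueError(f"prompt is missing the model tag {tag!r}")
    terms = []
    for term in negative_prompt.split(","):
        term = term.strip()
        if term and term not in terms:   # drop blanks and repeated terms
            terms.append(term)
    return {"prompt": prompt.strip(), "negative_prompt": ", ".join(terms)}


request = build_request(
    "A happy tabby cat #tetcats# style leaping from flowers "
    "at a passing butterfly. Rough painting"
)
print(len(request["negative_prompt"].split(", ")))   # 16 terms
```

Forgetting the tag is an easy mistake to make when rewriting prompts quickly, and it silently costs credits on images that ignore the trained style.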

What I liked about these images was the flowers that were definitely in the style, but the cats were moving away from my style, and all the images looked too smooth. More like digital art than paintings.

I kept my new reference image but switched up my prompt to: "A happy tabby cat #tetcats# style leaping from flowers at a passing butterfly. Rough painting"

I will admit that I really liked some of the generated images but they looked nothing like my cats.

| Fig 7. While I really liked some of these generations they just weren't that close to my style at all. |

Okay, That's Not a TET Style Cat, This is a TET Style Cat

At this point I decided to abandon using a reference image in the hope that my negative prompt would be enough to pull the AI back from generating deformed 'Dali' cats.

Doing this saw the return of cats that actually bore some resemblance to my style of cats, but the images still looked too smooth, too much like digital art. Plus my negative prompt didn't always suppress extra limbs and duplicate cats.

Whose Art is This?

By this point I had burned through more than half of my 400 credits and still hadn't seen anything that would make me question whether it was me or an AI that had created it.

You can see in the previous set of images, the bottom three do have a little more of a painterly style as a result of adding the words 'impasto painting' into my prompt. While the images are clearly influenced by my art style I thought I'd see what would happen if I added the art style of some more famous artists, with similar styles to me, that I know Stable Diffusion will recognize.

If you want to try adding artist names to your prompts there's an entire list of all 1833 represented artists with AI output comparisons in Stable Diffusion you can search.

No, Really... Whose Art is This?

By this point I'd almost burned through my 400 credits. While I don't think I achieved any one image that I could say looks exactly like something I could have painted I did arrive at a look and style that is a blend of my style and another artist - as well as the impasto painting technique.

| Fig 10. The Race - Original Art by Josephine Wall |

I feel that last part is quite important as my TETCats style seems to survive really well when blended with her style. I like the blend in style so much I used up all my remaining credits on 76 more generations.

My final prompt: "A happy tabby cat #tetcats# style, leaping from flowers, attempting to catch a passing butterfly. Josephine Wall, impasto painting. Minimal warm bright background."

Overall I really like the combination of styles. While not every generation produces a great image - particularly when it comes to limb counts - the cats and flowers are clearly influenced by my art.

Josephine's style adds a lot more texture detail and shading than I would do, along with the choice of coloring used in the backgrounds. I tend to stick to one gradient of one or two colors.

Then there's whatever influence the words 'impasto painting' add into the mix since Josephine doesn't really have an impasto style.

The question is, genuinely, whose art is this?

| Fig 12. Left: Original TET Cats Art Acrylic Painting. Middle: AI TETCats/Josephine Wall Generation. Right: Original Josephine Wall Painting, The Race. |

Not only are these final images influenced by my own original art, I also curated the subject matter and the influences that would affect that subject matter, specifically referencing both the impasto painting technique and Josephine Wall's work.

Could I have arrived at a similar artwork through traditional means (actually researching Josephine's work and drawing and painting the final image)? Possibly but highly unlikely. Particularly because I haven't painted cats in years.

Plus there's no reason for me to have my work influenced by Josephine's if I'm actually painting the work myself, since I added her work's influence to try and emulate a more painterly style similar to my own.

Are My Images a Breach of Josephine's Copyright?

This is definitely not legal advice but I have studied copyright law enough to relatively confidently say 'no'. Whether Josephine consented to having her art included in Stable Diffusion's data set is another matter entirely.

On the face of comparing my AI Generations to her original art I'd say anyone would be hard pressed to say my images are a rip off of her style (you can't actually copyright a style either) or any of her specific artworks (artwork is definitely protected by copyright).

While there's no specific amount of an artwork you can 'copy' without being in breach of copyright, it is up to a court to decide whether you've 'transformed' a work enough for it not to be a 'copy' of the original. That is, if someone looked at your work, would they immediately connect it back to the artwork you copied?

I think even Josephine Wall would be hard pressed to look at any of my AI generations influenced by her art and even recognize her work was an influence without being told.

It's All Public Domain Anyway So Who Cares?

USA Copyright Law currently defines AI Image generations as Public Domain. This could change in the future depending on how image generation evolves and whether artists receive any compensation whenever their name is used in a prompt. Time will tell - and it's not that simple.

I could, theoretically, train an AI model on every aspect of my art and only generate images using that data. That's all my intellectual property. If I want to feed my art through an AI filter, is it any less my property when it comes out the other end? Particularly if I own the AI model as well? Have I just made my IP public domain just because I used a computerized assistant to create the images?

How is that different from a professional artist hiring assistants to create the actual art? How is it different from a company owning the copyright on work they hired an artist to design and create for them?

It's Money on the Table for Artists

As much as artists are crying foul on their work being included in AI data sets without their permission, I think they'll soon be crying foul on all AI generations being considered public domain. AI image generation is too good a tool for modern artists to ignore altogether.

Being able to generate 400 high quality, unique images in a specific style in less than an hour is money on the table for artists everywhere. Whether it's brainstorming ideas, producing final artwork for selling prints, or something else, it's a massive time saver.

You're going to want to say, with confidence, this is your art, made with the assistance of AI. Without you those AI image generations you curated simply would not exist.

---o ---o--- o---

Answers to Fig 9 image artists from left to right, top to bottom: 1. Leonid Afremov | 2. Paul Cézanne | 3. Berthe Morisot | 4. Thomas Gainsborough | 5. Lovis Corinth | 6. Andrew Atroshenko | 7. Gaston Bussière | 8. Pixar | 9. Josephine Wall